Discord AI Chatbot: Part 2

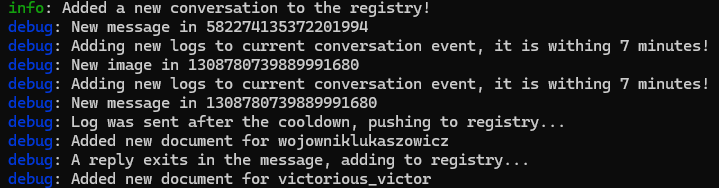

My data collection script has reached about 20k messages, which I am quite proud of. I had to change how I collected data because of a bug: conversations kept getting added to the file while the original event log was still being parsed. It came down to how the async logic was handled. I only cleared the event log once the whole thing had been parsed, but every message in the log triggered its own write to the database, so a log of 100 events took over 10 seconds to process. That left a long window in which the log was still live while it was being worked through, and only at the very end did I clear it to make room for new events.
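Here is a minimal sketch of one way to avoid that kind of race, not my actual script. The names `EventLog`, `save_message`, and `flush` are made up for illustration, and the database is faked with a list and a short sleep. The idea is to swap the buffer out before doing any slow writes, so anything that arrives mid-processing lands in a fresh list instead of the one being parsed.

```python
import asyncio


class EventLog:
    """Hypothetical in-memory buffer of incoming messages."""

    def __init__(self):
        self._events = []

    def add(self, event):
        self._events.append(event)

    def drain(self):
        # Swap the buffer in one step, with no await in between, so events
        # that arrive during processing go into the new, empty list.
        batch, self._events = self._events, []
        return batch


async def save_message(db, event):
    # Stand-in for a slow database write (assumption: one write per message).
    await asyncio.sleep(0.01)
    db.append(event)


async def flush(log, db):
    batch = log.drain()
    for event in batch:
        await save_message(db, event)
    return len(batch)


async def demo():
    log, db = EventLog(), []
    for i in range(3):
        log.add(f"msg {i}")
    task = asyncio.create_task(flush(log, db))
    await asyncio.sleep(0)  # let flush start and drain the first batch
    log.add("late msg")     # arrives while the first batch is being written
    await task
    await flush(log, db)    # the late message is picked up by the next flush
    return db


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

With this layout each message should be written exactly once, even if new ones show up while an earlier batch is still being saved.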

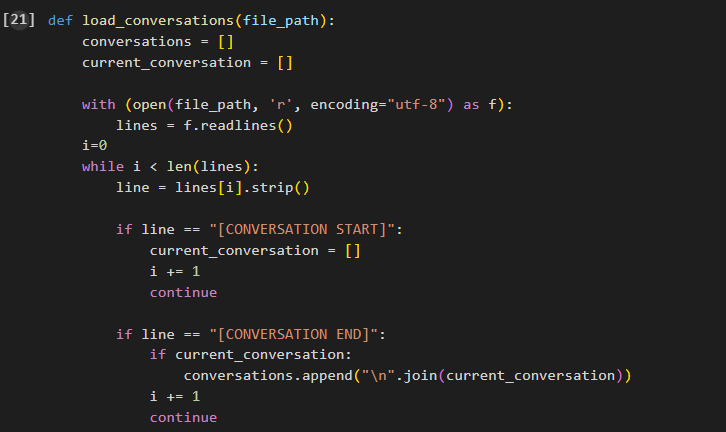

Since fixing it, I have had the data collection script online for 48 hours straight, and it seems to pull in about 10k messages per day, which isn't amazing, but it can easily be scaled up to my liking. Once I had about 12k messages, I decided to start training. Now, the thing about me is I am way too scared to learn about the true depths of deep learning, so I look to ChatGPT to assist me. The issue with this is that it isn't very good at coding and can lead you down an incorrect rabbit hole. I still knew enough to set up my coding environment with WSL, CUDA, and TensorFlow. The real issue started when I tried to use my first pre-trained model, Falcon-7B. Supposedly, it's not compatible with TensorFlow, and ChatGPT was pretty much gaslighting me the entire time. On the spot, I chose to go with GPT-2 for fine-tuning, as it was similar and had native support for TensorFlow.

When everything was set up, I clicked train and ran into my first issue: my GPU's VRAM could not handle the training whatsoever. I have a 4060 Ti, so this was odd to see. I messed with the batch size, changed the model size, and changed the context length, but nothing helped. I was getting quite upset because I wanted everything to be cheap or hosted locally. I decided to go back to basics and move my code over to Google Colab. It was relatively easy to import everything, and I put my dataset on Google Drive so it would persist if I ever restarted my runtime. I had to buy some processing power, so I went for the "pay as you go" option. You buy 100 compute units, which I suppose is the new cryptocurrency, and that unlocks GPUs like the T4 and A100.
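For a rough sense of why VRAM runs out so fast, here is a back-of-the-envelope sketch. It rests on assumptions I have not verified against my own run: full fp32 training with an Adam-style optimizer, which costs about 16 bytes per parameter (4 for the weight, 4 for the gradient, 8 for the two optimizer moments), and the approximate parameter counts of the public GPT-2 checkpoints. Activations, which grow with batch size and context length, come on top of this.

```python
# Approximate parameter counts for the public GPT-2 checkpoints.
GPT2_PARAMS = {
    "gpt2": 124e6,
    "gpt2-medium": 355e6,
    "gpt2-large": 774e6,
    "gpt2-xl": 1.5e9,
}

# Assumption: fp32 weights (4) + fp32 gradients (4) + two Adam moments (8).
BYTES_PER_PARAM = 4 + 4 + 8


def training_state_gib(num_params):
    """Memory for weights, gradients, and optimizer state, in GiB."""
    return num_params * BYTES_PER_PARAM / 2**30


if __name__ == "__main__":
    for name, n in GPT2_PARAMS.items():
        print(f"{name}: ~{training_state_gib(n):.1f} GiB before activations")
```

The 4060 Ti ships with either 8 GB or 16 GB of VRAM, so under these assumptions the larger checkpoints would be out of reach before a single batch is loaded, and even the smaller ones leave limited room for activations.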

My first training session did not take too long, but when I tried to run inference, it gave me an annoying error. I had to convert my input tensor to float16 because that is the precision I had set during training. But even converting it didn't work. I downloaded the model to my computer and tried to run inference there, and it sort of worked. I got this weird output where it just gave "[:[:[:[:[:[:[:[:[:[". I was confused as to why the error showed up on Google Colab but not on my local system. After some more research (ChatGPT), I decided to train again with the float16 precision setting turned off. Inference now ran on Google Colab, but I was still getting that annoying output. I tried other inputs, and it actually gave me some interesting stuff.

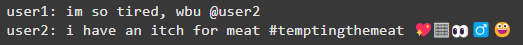

I was the one who plugged in user 1, but the bot gave user 2. Insanely weird stuff. After going to sleep that night and coming back to it after math class, it wasn't working like before. I was getting fed up with this, so I decided to do some research without ChatGPT and came across a tutorial that was doing the same kind of thing. I altered the author's code a bit to fit my dataset, and now the model is training as I type this blog. The training process is a lot longer, and the training loss looks a lot different from what I got before. I have been training for about 2 hours now, so I am curious to see how this goes.